Dynamic Visual Meta-Embedding

Dynamic Visual Meta-Embedding

Abstract

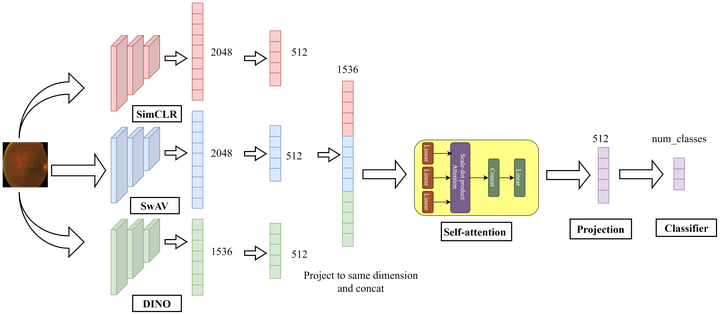

Transfer learning has become a standard practice to mitigate the lack of labeled data in medical classification tasks. Whereas finetuning a downstream task using supervised ImageNet pretrained features is straightforward and extensively investigated in many works, there is little study on the usefulness of self-supervised pretraining. In this paper, we assess the transferability of ImageNet self-supervised pretraining by evaluating the performance of models initialized with pretrained features from three self-supervised techniques (SimCLR, SwAV, and DINO) on selected medical classification tasks.

Type

Publication

In ArXiV

The paper is available here.